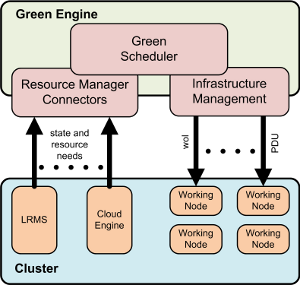

CLUES Architecture

CLUES has a fully modular architecture. Its main design goal is to make CLUES easy to adapt to almost any cluster management system and easy to integrate with almost any infrastructure.

The result of the design can be seen in the following figure, which shows the main components of CLUES.

The architecture consists of a main component, the CLUES Engine. The Engine uses a set of connectors to the cluster managers and a set of mechanisms to power the computing nodes on and off.
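The following minimal Python sketch illustrates how the responsibilities could be divided between the Engine, a connector and a power mechanism. Every class and method name here (`ClusterConnector`, `PowerManager`, `Engine`, `node_states`, `on_job_submitted`, ...) is hypothetical and does not reflect CLUES's actual code. The power policy is also deliberately naive. The sketch only shows that the Engine depends on two pluggable interfaces rather than on any specific cluster manager or power-on method.

```python
from __future__ import annotations

from abc import ABC, abstractmethod


class ClusterConnector(ABC):
    """Hypothetical connector to a cluster manager (e.g. Torque/PBS, OpenNebula)."""

    @abstractmethod
    def node_states(self) -> dict[str, str]:
        """Return a mapping of node name -> 'idle', 'busy' or 'off'."""


class PowerManager(ABC):
    """Hypothetical power mechanism (e.g. Wake-on-LAN or a PDU)."""

    @abstractmethod
    def power_on(self, node: str) -> None: ...

    @abstractmethod
    def power_off(self, node: str) -> None: ...


class Engine:
    """Toy engine combining one connector and one power mechanism."""

    def __init__(self, connector: ClusterConnector, power: PowerManager) -> None:
        self.connector = connector
        self.power = power

    def on_job_submitted(self, nodes_needed: int) -> list[str]:
        """Power on just enough 'off' nodes to cover the request; return them."""
        states = self.connector.node_states()
        idle = sum(1 for s in states.values() if s == "idle")
        missing = max(0, nodes_needed - idle)
        to_start = sorted(n for n, s in states.items() if s == "off")[:missing]
        for node in to_start:
            self.power.power_on(node)
        return to_start

    def power_off_idle(self) -> list[str]:
        """Naive policy: switch off every idle node immediately; return them."""
        idle = sorted(n for n, s in self.connector.node_states().items() if s == "idle")
        for node in idle:
            self.power.power_off(node)
        return idle


# Example usage with in-memory stand-ins for a real connector and power plug-in.
class StaticConnector(ClusterConnector):
    def __init__(self, states: dict[str, str]) -> None:
        self.states = states

    def node_states(self) -> dict[str, str]:
        return dict(self.states)


class RecordingPower(PowerManager):
    def __init__(self) -> None:
        self.actions: list[tuple[str, str]] = []

    def power_on(self, node: str) -> None:
        self.actions.append(("on", node))

    def power_off(self, node: str) -> None:
        self.actions.append(("off", node))


if __name__ == "__main__":
    power = RecordingPower()
    engine = Engine(StaticConnector({"n1": "idle", "n2": "off", "n3": "off"}), power)
    engine.on_job_submitted(2)  # one idle node is available, so one node is powered on
    print(power.actions)  # [('on', 'n2')]
```

Because the Engine only sees the two abstract interfaces, supporting a new cluster manager or power-on method means writing one new subclass. The Engine itself does not change.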

All these components only need to be installed once, on the front-end node that manages the cluster.

Connectors with management middleware

These connectors have two main tasks: capturing job submissions and monitoring how the management middleware uses the nodes.

Capturing job submissions

Many cluster managers (such as Torque/PBS or OpenNebula) provide mechanisms for performing arbitrary actions at the moment a job enters their scheduler queue. These mechanisms are a very convenient way to intercept jobs, because they integrate the management middleware with CLUES by following the middleware's own architecture.
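Torque's submit filter is one example of such a mechanism. It is an executable configured through the `SUBMITFILTER` parameter in `torque.cfg`. As we understand it, qsub passes the job script to the filter on stdin, submits whatever the filter writes to stdout, and rejects the job if the filter exits with a non-zero status. The sketch below assumes that behaviour. It extracts the node count from the `#PBS` directives and then passes the script through unchanged. `notify_clues` is a hypothetical placeholder, not a real CLUES API. The node-count parsing is a simplification that ignores host-name node specifications.

```python
import re
import sys

# Captures the node count in directives such as "#PBS -l nodes=2:ppn=4"
# or "#PBS -l walltime=01:00:00,nodes=3".
NODES_RE = re.compile(r"^#PBS[ \t]+-l[ \t]+.*\bnodes=(\d+)", re.MULTILINE)


def requested_nodes(script: str) -> int:
    """Return the node count requested in the #PBS directives (default: 1)."""
    match = NODES_RE.search(script)
    return int(match.group(1)) if match else 1


def notify_clues(nodes: int) -> None:
    """Hypothetical placeholder for the call that tells CLUES a job has arrived."""
    print(f"CLUES: incoming job requests {nodes} node(s)", file=sys.stderr)


def main() -> int:
    script = sys.stdin.read()  # the job script arrives on stdin
    notify_clues(requested_nodes(script))
    sys.stdout.write(script)  # stdout becomes the submitted script: pass it through
    return 0  # a non-zero exit status would make qsub reject the job


if __name__ == "__main__":
    sys.exit(main())
```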

When such mechanisms are not available, the job submission command can always be wrapped by an application or script. The wrapper first informs CLUES about the job and then invokes the actual submission command.
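The wrapping approach can be sketched as a small program that takes the name of the original command. This sketch assumes the real `qsub` has been moved to a hypothetical path, `REAL_QSUB`. `notify_clues` is again a hypothetical placeholder for the CLUES notification. The notifier and the runner are injectable, which keeps the control flow (notify first, then delegate and propagate the exit status) easy to test.

```python
import subprocess
import sys
from typing import Callable, List, Sequence

# Hypothetical path where the real qsub was moved so the wrapper can take its name.
REAL_QSUB = "/usr/local/bin/qsub.real"


def notify_clues(argv: Sequence[str]) -> None:
    """Hypothetical placeholder for the call that informs CLUES about the job."""
    print(f"CLUES: new submission {list(argv)}", file=sys.stderr)


def wrap_submission(
    argv: Sequence[str],
    notify: Callable[[Sequence[str]], None] = notify_clues,
    run: Callable[[List[str]], int] = subprocess.call,
    real_command: str = REAL_QSUB,
) -> int:
    """Inform CLUES first, then delegate to the real submission command."""
    notify(argv)
    return run([real_command, *argv])


if __name__ == "__main__":
    # Forward all user arguments and propagate the real command's exit status.
    sys.exit(wrap_submission(sys.argv[1:]))
```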

As a more advanced option, it is possible to develop specific schedulers that communicate with CLUES through its API and request resources explicitly. Although this approach can lead to better management, the previous approaches remain available if the underlying management systems should not be modified.

Monitoring

Practically every cluster management middleware provides commands to check the state of the working nodes (e.g. the "pbsnodes" command in Torque, or the "onehost" and "onevm" commands in OpenNebula). These commands can therefore be used to report to CLUES the usage state of the infrastructure from the point of view of a particular subsystem.
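The following sketch shows a Torque monitoring connector that parses the default text output of `pbsnodes -a`. That output consists of one unindented node name followed by indented `key = value` attributes, with records separated by blank lines. The `SAMPLE` text is illustrative and was not captured from a real system. The `clues_state` mapping onto idle/busy/off is a hypothetical simplification. A Torque node reported as `free` may still be running jobs on some of its cores, so a real connector would likely need to inspect further attributes such as `jobs`.

```python
from __future__ import annotations

# Illustrative pbsnodes output (not captured from a real cluster).
SAMPLE = """node01
     state = free
     np = 4

node02
     state = down,offline
     np = 4
"""


def parse_pbsnodes(output: str) -> dict[str, str]:
    """Map node name -> raw Torque state from `pbsnodes -a` text output."""
    states: dict[str, str] = {}
    node = None
    for line in output.splitlines():
        if not line.strip():
            node = None  # a blank line ends the current node record
            continue
        if not line[0].isspace():
            node = line.strip()  # unindented line: start of a new node record
            states[node] = "unknown"
        elif node is not None:
            key, sep, value = line.strip().partition(" = ")
            if sep and key == "state":
                states[node] = value
    return states


def clues_state(pbs_state: str) -> str:
    """Hypothetical, simplified mapping of a Torque state onto idle/busy/off."""
    flags = set(pbs_state.split(","))  # Torque may report e.g. "down,offline"
    if "down" in flags:
        return "off"
    if flags == {"free"}:
        return "idle"
    # Anything else (job-exclusive, busy, offline, ...) is conservatively treated
    # as busy, so the node is never considered a candidate for power-off.
    return "busy"


if __name__ == "__main__":
    print({n: clues_state(s) for n, s in parse_pbsnodes(SAMPLE).items()})
    # {'node01': 'idle', 'node02': 'off'}
```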

For more accurate state information, applications can easily be developed for some systems, such as OpenNebula, that use the system's own API to provide more up-to-date and more detailed information.

Integration with the infrastructure

Not all clusters are the same. They can differ in their operating system (Windows, Linux, etc.) and in the remote power-on mechanisms available. Examples include Wake-on-LAN, which switches on a computer by sending a special network packet to its network card, and systems based on Power Distribution Units, which can selectively supply power to particular computing nodes.

The infrastructure integration plug-ins can cope with this diversity. They make it possible to use customized commands to power the computers on or off using the specific mechanisms available in each cluster.
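As an illustration, here is a sketch of a power plug-in based on Wake-on-LAN. The magic packet format is standard: 6 bytes of `0xFF` followed by the target MAC address repeated 16 times, usually sent as a UDP broadcast. The `WakeOnLanPlugin` class and its default `ssh {node} poweroff` shutdown command are hypothetical. They only show how a plug-in could combine a built-in power-on method with a customizable power-off command.

```python
from __future__ import annotations

import re
import shlex
import socket
import subprocess


def magic_packet(mac: str) -> bytes:
    """Wake-on-LAN magic packet: 6 bytes of 0xFF, then the MAC repeated 16 times."""
    hex_digits = re.sub(r"[^0-9A-Fa-f]", "", mac)  # drop ':' or '-' separators
    if len(hex_digits) != 12:
        raise ValueError(f"invalid MAC address: {mac!r}")
    return b"\xff" * 6 + bytes.fromhex(hex_digits) * 16


def send_magic_packet(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Broadcast the magic packet over UDP (port 9 is a common choice)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(magic_packet(mac), (broadcast, port))


class WakeOnLanPlugin:
    """Hypothetical plug-in: Wake-on-LAN to power on, a custom command to power off."""

    def __init__(self, macs: dict[str, str], off_command: str = "ssh {node} poweroff") -> None:
        self.macs = macs  # node name -> MAC address of its network card
        self.off_command = off_command  # hypothetical default; adapt to each cluster

    def power_on(self, node: str) -> None:
        send_magic_packet(self.macs[node])

    def power_off(self, node: str) -> None:
        subprocess.run(shlex.split(self.off_command.format(node=node)), check=True)
```

A PDU-based plug-in would expose the same two methods but call the PDU's own control interface instead.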

Hooks

In addition to the components described above, CLUES includes a comprehensive hook mechanism. Hooks allow specific actions to be performed for each cluster management subsystem whenever an event relevant to the cluster state occurs (e.g. a node is switched on or off, or a node is detected as unexpectedly switched on or off).
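A hook mechanism of this kind can be sketched as an event registry. Each cluster management subsystem could keep its own registry, and the CLUES Engine would fire events as it observes them. The event names in `EVENTS`, the `HookRegistry` class and `command_hook` are hypothetical illustrations based on the examples above, not CLUES's actual hook interface. `command_hook` shows how an external script could be plugged in as a hook.

```python
from __future__ import annotations

import subprocess
from collections import defaultdict
from typing import Callable

Hook = Callable[[str], None]  # a hook receives the name of the affected node

# Hypothetical event names modelled on the examples in the text.
EVENTS = {"power_on", "power_off", "unexpected_on", "unexpected_off"}


class HookRegistry:
    """Runs every hook registered for an event, in registration order."""

    def __init__(self) -> None:
        self._hooks: dict[str, list[Hook]] = defaultdict(list)

    def register(self, event: str, hook: Hook) -> None:
        if event not in EVENTS:
            raise ValueError(f"unknown event: {event}")
        self._hooks[event].append(hook)

    def fire(self, event: str, node: str) -> int:
        """Invoke the hooks for `event` on `node`; return how many ran."""
        hooks = self._hooks.get(event, [])
        for hook in hooks:
            hook(node)
        return len(hooks)


def command_hook(command: str) -> Hook:
    """Wrap an external script so that it runs with the node name as argument."""

    def run(node: str) -> None:
        subprocess.run([command, node], check=False)

    return run
```

For instance, `registry.register("unexpected_on", command_hook(...))` could notify a subsystem that a node it believed to be off has come back. The script path is left to the administrator.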
